The Shodan database and web site, known for finding and cataloging Industrial Control Systems (ICS) and Internet of Things (IoT) devices exposed on the Internet, is now providing free tools to educational institutions. Shodan creator John Matherly says that “by making the information about what is on their [universities] network more accessible they will start fixing/discussing some of the systemic issues.”

The .edu package includes over 100 export credits (for large data and report exports), access to the new Shodan Maps feature, which plots results on geographical maps, and the Small Business API plan, which provides programmatic access to the data (as opposed to web access or exports).

Higher ed faces unique and substantial risks, due in part to the intellectual property derived from research and to the Personally Identifiable Information (PII) it holds on students, faculty, and staff. In fact, a recent report states that US higher education institutions are at higher risk of a security breach than retail or healthcare. The FBI has documented multiple avenues of attack on universities in its white paper, Higher Education and National Security: The Targeting of Sensitive, Proprietary and Classified Information on Campuses of Higher Education.

The university mindset of openness, sharing, and knowledge propagation can itself be a significant component of the risk that universities face.

Data breaches at universities have clear financial and reputational impacts on the organization. Reputation damage not only affects a university's ability to attract students, it also likely affects its ability to recruit and retain high-producing, highly visible faculty.

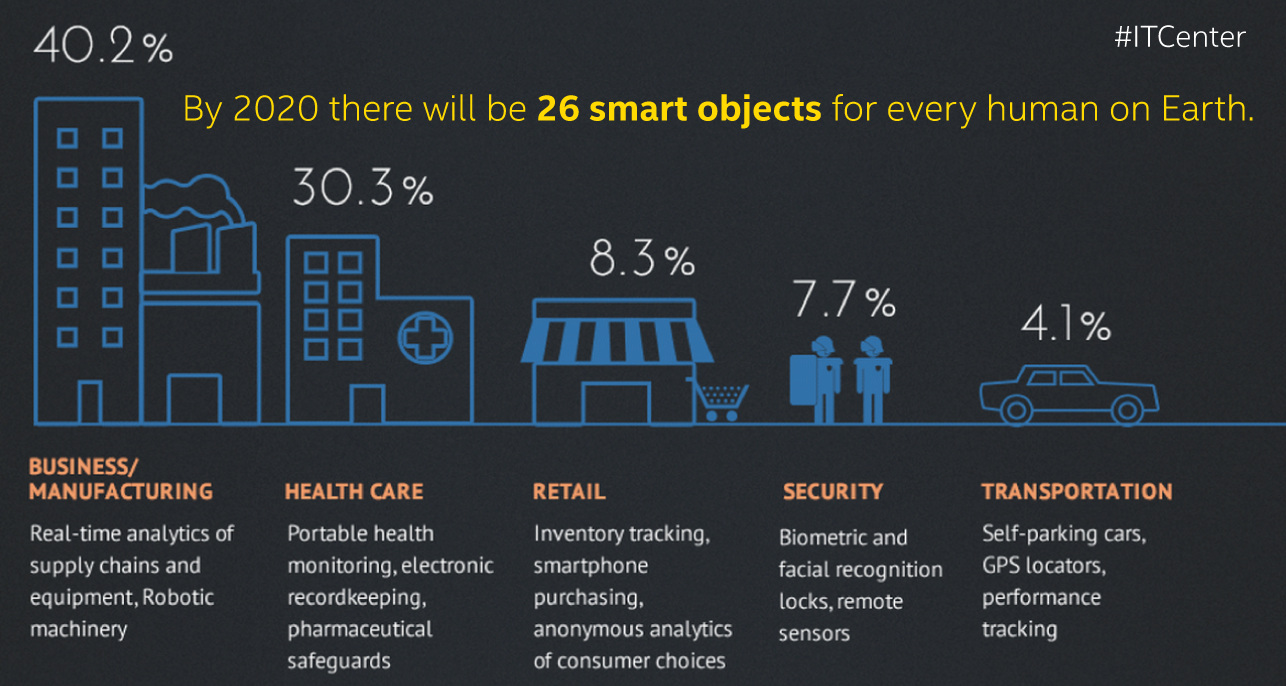

The risk posed by Industrial Control Systems combined with the Internet of Things is a rapidly growing and little understood area of exposure for universities. Beyond holding research data and intellectual property, PII on students, faculty, and staff, and Protected Health Information (PHI) if the university has a medical facility, universities can also resemble small to medium sized cities. These ‘cities’ might provide electric, gas, and water services, and run their own HVAC systems, fire alarm systems, building access systems, and other ICS/IoT systems. As in other organizations, these systems can provide substantial points of attack for malicious actors.

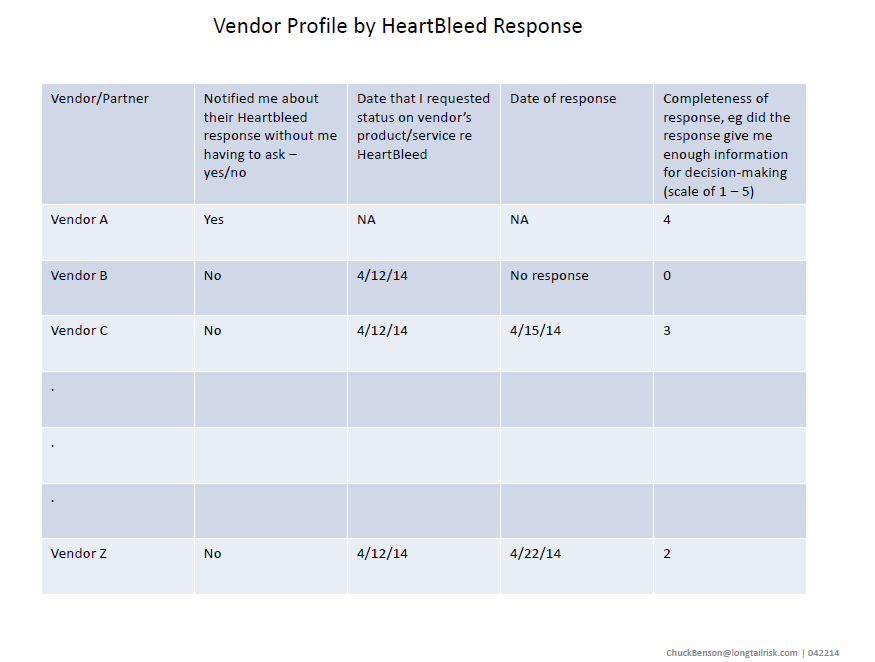

Using tools such as Shodan to identify, analyze, and prioritize exposures, and to develop mitigation plans, is important for any higher education organization. Even if the resources to mitigate an identified risk are not immediately available, university leadership at least knows the risk is there and has the opportunity to weigh it along with all of the other risks that universities face. We can rest assured that bad guys, whatever their respective motivations, are looking at exposure and attack avenues at higher education institutions. Higher ed institutions might as well have the same information as the bad guys.
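As a rough illustration of what the "identify and prioritize" step might look like with programmatic access, here is a minimal Python sketch. It assumes the third-party `shodan` package and its `Shodan.search()` call, whose results include a `matches` list of records with `ip_str` and `port` fields. The helper names (`summarize_ics_exposure`, `fetch_matches`), the port-to-protocol table, and the example query are illustrative assumptions, not something taken from Shodan's .edu offering.

```python
from collections import Counter

# Assumption: a small, hand-picked table of default ports for common
# ICS / building-automation protocols. A real review would use a fuller list.
ICS_PORTS = {
    102: "Siemens S7",
    502: "Modbus",
    20000: "DNP3",
    44818: "EtherNet/IP",
    47808: "BACnet",
}


def summarize_ics_exposure(matches):
    """Count ICS-protocol services in a list of Shodan-style match dicts.

    Returns (protocol, count) pairs, most frequent first.
    """
    counts = Counter()
    for match in matches:
        label = ICS_PORTS.get(match.get("port"))
        if label is not None:
            counts[label] += 1
    return counts.most_common()


def fetch_matches(api_key, query):
    """Hypothetical helper: run one Shodan search and return its matches.

    Needs the third-party `shodan` package and a valid API key.
    """
    import shodan  # imported lazily so the summary code runs without it

    api = shodan.Shodan(api_key)
    return api.search(query)["matches"]


if __name__ == "__main__":
    # Made-up records using documentation-range IP addresses.
    sample = [
        {"ip_str": "192.0.2.10", "port": 502},
        {"ip_str": "192.0.2.11", "port": 47808},
        {"ip_str": "192.0.2.12", "port": 443},
        {"ip_str": "192.0.2.13", "port": 502},
    ]
    for protocol, count in summarize_ics_exposure(sample):
        print(f"{protocol}: {count}")
```

With a real key, something like `fetch_matches(key, "net:192.0.2.0/24")` (substituting the campus address range) would feed live results into the same summary. A per-protocol count is only a starting point for prioritization, but it gives leadership a concrete picture of what is exposed.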