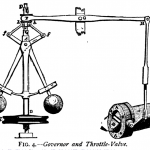

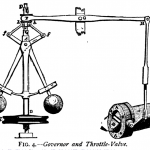

centrifugal governor

Probably one of the earliest papers written on control theory was James Clerk Maxwell’s On Governors, published in 1868. In the paper, Maxwell documented the observation that too much control can have the opposite of the desired effect.

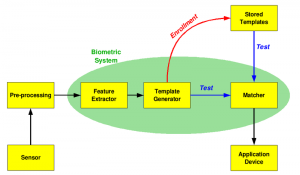

Compliance efforts, in general, and certainly in cybersecurity work, can be seen as a governing activity. The idea is that, without compliance activity, a business or government enterprise would have one or many components that operate to excess. This excess might manifest itself in financial loss, personal injury, damage to property, breaking laws, or otherwise creating (most likely unseen) risk to the customer base or citizenry. For example, a company that manages personally identifiable information as a part of its service has an obligation to take care of that data.

In moderation, regulatory compliance makes sense. Some mandated regulatory activities can build in a desirable safety cushion and lower risk to customers and citizens. The problem, of course, is: what defines moderation?

Compliance and regulatory rules are generally additive. One doesn’t often hear that an update to a regulation is going to remove rules, constraints, or restrictions. Updated regulatory law far more often adds regulation. More to do. More to do usually means more time, effort, and resources that have to come from somewhere if an organization is indeed to ‘be compliant’.

Tightening the screws

James Clerk Maxwell

Maxwell was a savant who made far-reaching contributions to mathematics and to the science of electromagnetism, electricity, and optics before dying at the age of 48. Einstein described Maxwell’s work as “the most profound and the most fruitful that physics has experienced since the time of Newton.”

In his paper, On Governors, Maxwell wrote,

“If, by altering the adjustments of the machine, its governing power is continually increased, there is generally a limit at which the disturbance, instead of subsiding more rapidly, becomes an oscillating and jerking motion, increasing in violence till it reaches the limit of action of the governor.”

Maxwell’s observation, that physical governors produce the opposite of the intended behavior once control is added past a certain point, has parallels elsewhere, such as overcoached sports teams, overproduced record albums (showing my age here), and micromanaged business or military units. I believe over-control applies in a similar way in the world of compliance.

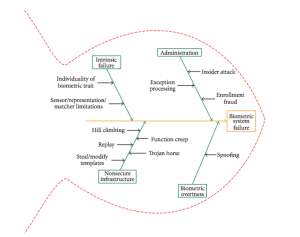

A system with no regulation often operates inefficiently or creates risk, frequently unseen, to operators and bystanders. Adding a little bit of regulation can allow the system to run more smoothly, lower risk to those around or involved in the system, and allow the system to have a longer life. However, there is a point reached while adding more regulation where detrimental or even self-destructive effects can occur. In Maxwell’s example, the disturbance “becomes an oscillating and jerking motion, increasing in violence” until the physical governor can no longer govern.
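A toy sketch can make this concrete. The Python below is not Maxwell’s analysis (his paper works with differential equations for real machines); it is a deliberately simple stand-in in which a hypothetical governor removes `gain` times the current disturbance at every step. The function names, the step count, and the gain values are all assumptions chosen for illustration.

```python
def simulate(gain, steps=20, disturbance=1.0):
    """Track a disturbance while a toy governor removes gain * disturbance each step."""
    history = [disturbance]
    for _ in range(steps):
        current = history[-1]
        # The correction is proportional to the current disturbance.
        history.append(current - gain * current)
    return history


def oscillates(history):
    """True if the disturbance ever flips sign between consecutive steps."""
    return any(a * b < 0 for a, b in zip(history, history[1:]))


if __name__ == "__main__":
    # Illustrative gains only: none, moderate, heavy, excessive.
    for gain in (0.0, 0.5, 1.5, 2.5):
        run = simulate(gain)
        print(f"gain={gain}: oscillates={oscillates(run)}, final size={abs(run[-1]):.6f}")
```

In this toy model, no correction leaves the disturbance in place, a moderate gain lets it die away smoothly, a heavier gain overshoots and flips sign on every step while still settling, and an excessive gain flips sign with growing size. That last case is the over-governed regime the quote describes, reduced to a single line of arithmetic.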

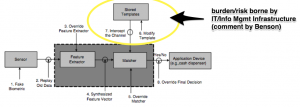

That critical zone of too many compliance mandates in business and government systems manifests itself in undesirable behavior in a couple of ways. One is that the resources to comply with the additional regulations are simply not there. That is, not everything can be complied with if the business is to stay in business or the government process is to continue to function. Choices must then be made about what gets complied with. And that means that the entity that is supposedly being regulated is now, by necessity, making its own decisions (independent of regulators, whether directly or indirectly) about what it will and will not comply with. That, in itself, does not provide particularly helpful control. Worse, the organization might choose to drop the early compliance rules that were actually helpful.

Another way that too much compliance can have unintended consequences is that an organization that does try to comply with everything, redirecting as much of its operating resources as necessary, can in fact bleed itself out. It might go broke trying to comply.

This is not to say that entities should be allowed to stay in business if their business model cannot support essential regulation. Again, there is some core level of regulation and compliance that serves the common good. However, there is a crossable point where innovation and new opportunity start to wash out because potentially innovative companies that might add value to the greater good cannot stay in the game.

“I used to do a little, but a little wouldn’t do it, so a little got more and more”

Axl, Izzy, Slash, Duff, & Steve on Mr. Brownstone from the album Appetite for Destruction, Guns N’ Roses

The line above, from the Guns N’ Roses song Mr. Brownstone, sometimes comes to mind when I think about compliance excess, tongue in cheek or not. The song is a dark, frank (and loud) reflection on addiction. The idea is that a little of something provided some short-term benefit, but then that little something wasn’t enough. That little something needed to be more and more and quickly became a process unto itself. Of course, I’m not saying that excessive compliance = heroin addiction, but both do illustrate systems gone awry.

So where is the stopping point for compliance, particularly in cybersecurity? When does that amount of regulatory activity, that previously was helpful, start to become too much?

I don’t know. And worse, there’s no great mechanism to determine that for all businesses and governments in all situations. However, I do believe that independent small and medium-sized businesses can make good, or at least reasonable, decisions for themselves as well as for the greater good of the community. I would suggest:

- Determine how much we can contribute to our respective compliance efforts.

- Prioritize our security compliance efforts.

- Start with compliance items that allow us to balance what 1) makes us most secure, 2) contributes most to the security of the online community, and 3) (save ourselves here) could have the stiffest penalties for noncompliance.

- Know which things we are not complying with. Even if we can’t comply with everything, we still have to know what the law is. Have a plan, even if brief, for addressing the things that we currently can’t comply with. Even though the regulatory and compliance environment is complicated, it’s not okay to throw up our hands and pretend it’s not there.
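The suggestions above can be sketched as a small planning routine. This is only one possible reading, not a prescribed method: the `ComplianceItem` fields, the 0 to 5 scoring scale, the equal weighting of the three criteria, and the greedy value-per-cost ordering are all assumptions made for illustration, and the item names in the usage example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ComplianceItem:
    name: str
    cost: float              # effort or money, in the same unit as the budget; must be > 0
    own_security: int        # 0-5: how much it makes us more secure (assumed scale)
    community_security: int  # 0-5: how much it helps the wider online community
    penalty_risk: int        # 0-5: how stiff the penalty for noncompliance could be

    def value(self) -> int:
        # Assumption: the three criteria are weighted equally.
        return self.own_security + self.community_security + self.penalty_risk


def plan(items, budget):
    """Fund the best value-per-cost items first; return (funded, deferred)."""
    ranked = sorted(items, key=lambda item: item.value() / item.cost, reverse=True)
    funded, deferred = [], []
    remaining = budget
    for item in ranked:
        if item.cost <= remaining:
            funded.append(item)
            remaining -= item.cost
        else:
            # Not dropped: this is the list that still needs a plan, however brief.
            deferred.append(item)
    return funded, deferred


if __name__ == "__main__":
    # Hypothetical items and scores, purely for illustration.
    items = [
        ComplianceItem("patch management", 3, 5, 4, 2),
        ComplianceItem("breach notification procedure", 2, 2, 3, 5),
        ComplianceItem("annual third-party audit", 8, 3, 2, 4),
        ComplianceItem("staff phishing training", 2, 4, 3, 1),
    ]
    funded, deferred = plan(items, budget=8)
    print("funded:", [item.name for item in funded])
    print("known gaps:", [item.name for item in deferred])
```

A greedy pass like this is not guaranteed to find the best possible combination for a given budget, but it keeps the two outputs that matter here: what we are doing, and an explicit list of what we know we are not yet doing.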

I believe that the nature of our system in the US is to become increasingly regulatory, that is, additive and more taxing to comply with. However, we still need to know what the rules are and what the law is. From there, I believe, what defines the leadership and character of a small to medium-sized business or government entity is the choices that we make for ourselves and the greater good of the community.

[Governor & Maxwell Images: Wikimedia]

[Rolling Stone cover image: http://www.rollingstone.com/music/pictures/gallery-the-best-break-out-bands-on-rolling-stones-cover-20110502/guns-n-roses-1988-0641642]