Nice video from Scientific American on how Denial of Service (DoS) and Distributed Denial of Service (DDoS) attacks work …

Metaphors Amuck for CyberRisk

PW Singer wrote a great piece for the LA Times last month, “What Americans should fear in cyberspace.” In the article, Singer drives home the harm done by equating risks in cyberspace with historical physical and kinetic events such as Pearl Harbor, and by using language borrowed from the physical world: weapons of mass destruction, Cold War, etc.

Such language, such poorly contemplated metaphors, does not communicate actual risk. Worse, misinformation (aka statements-&-proclamations-that-are-wrong) is the thread. Singer points out that instead of educating, we fear monger.

In my opinion, the reasons for fear mongering with pithy armageddon-esque descriptions instead of providing education are twofold:

- it is easier to fear monger than it is to educate

- fear mongering titillates and sells advertising

None of this is to say that there is not a real challenge in communicating risk. There is a real challenge. As a society, we don’t have a basis for understanding this kind of risk. It’s much too new. In the shipping, financial, some health, and even sports industries, there are decades or centuries of actuarial data to work with. This industry has at most two decades, but even that is not terribly useful given the rate of change of the ecosystem and attack types.

Singer suggests studying other examples of how society has handled new (massive) ideas such as the story of the Centers for Disease Control and Prevention in public health. This seems like a great idea. (Right now, I wish I could think of more).

“The key is to move away from silver bullets and ever higher walls … “

Singer goes on to say that cyberrisk is here to stay and needs to be viewed as a new perennial management problem. Further, we need to acknowledge that attacks and degradation will happen and we need to plan for this. Planning for this and not wishing it away is building resilience. This, I believe, is the key. And with that enduring problem come the hard decisions of dedicating resources — whether from company revenue streams or ultimately taxpayer funds.

What metaphors can we use to better educate without fear mongering? How do you think national and business resilience should be funded?

[Image:Wikimedia Commons]

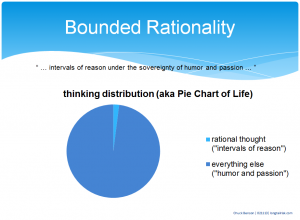

Chuck Benson’s Information Risk Management Video Lectures

My lectures on Information Risk Management are on deck again this week in the University of Washington & Coursera course Building an Information Risk Management Toolkit.

(Use the link above & click on Video Lectures on left & then go to Week 9. The video “Bounded Rationality” is a good place to start. Just need e-mail & password to create a Coursera account if you don’t have one).

In search of a denominator

Winston Churchill is frequently quoted as saying, ‘Democracy is the worst form of government, except for all the other ones.’ I feel a similar thing might be said about risk management: ‘Risk management is the worst form of predictive analysis for making IT, Information Management, and cybersecurity decisions, except for all the other ones.’

Because there is so much complexity and so many factors and systems to consider in IT, Information Management, and cybersecurity, we resort to risk management as a technique to help us make decisions. As we consider options and problem-solve, we can’t logically follow every possible approach and solution through and analyze each one on its relative merits. There are simply way too many. So we try to group things into buckets of like types and analyze them very generally with estimated likelihoods and estimated impacts.

We resort to risk management methods, because we know so little about the merits of future options that we have to ‘resort to’ this guessing game. That said, it’s the best game in town. We have to remind ourselves that the reason that we are doing risk management to begin with is that we know so little.

In some ways, it is a system of reasoned guesses — we take our best stabs at the likelihood of events and what we think the impacts of events might be. We accept the imprecision and approximations of risk management because we have no other better alternatives.

Approximate approximations

It gets worse. In the practice of managing IT and cybersecurity risk, we find that we have trouble doing the things that allow us to make those approximations that are to guide us. Our approximations are approximations.

Managing risk is about establishing fractions. In theory we establish the domain over which we intend to manage risk, much like a denominator, and we consider various subsets of that domain as we group things into buckets for simpler analysis, the numerator. The denominator might be all of the possible events in a particular domain, or it might be all of the pieces of equipment upon which we want to reflect, or maybe it’s all of the users or another measure.

When we manage risk, we want to do something like this:

- Identify the stuff, the domain — what’s the scope of things that we’re concerned about? How many things are we talking about? Where are these things? Count these things

- Figure out how we want to quantify the risk of that stuff — how do we figure out the likelihood of an event, how do we want to quantify the impact?

- Do that work — quantify it, prioritize it

- Come up with a plan for mitigating the risks that we’ve identified, quantified, & prioritized

- Execute that mitigation (do that work)

- Measure the outcome (we did some work to make things less bad; were we successful at making things less bad?)
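The quantify-and-prioritize steps in the list above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Risk` class, the likelihood-times-impact score, and every name and number below are hypothetical and do not come from any particular risk framework.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: float  # assumed: estimated chance of the event in a year, 0..1
    impact: float      # assumed: estimated cost if the event happens

    @property
    def score(self) -> float:
        # One common simplification: expected loss = likelihood * impact.
        return self.likelihood * self.impact


def prioritize(risks):
    """Return the risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Made-up entries, purely for illustration.
risks = [
    Risk("lost laptop", 0.30, 20_000),
    Risk("ransomware on file server", 0.05, 500_000),
    Risk("SaaS account takeover", 0.10, 80_000),
]

ranked = prioritize(risks)
for r in ranked:
    print(f"{r.name}: {r.score:,.0f}")
```

Both inputs are reasoned guesses, so the resulting ranking is no more precise than the guesses that feed it.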

The problem is that in this realm of increasingly complex problems, the 1st step, the one in which we establish the denominator, doesn’t get done. We’re having a heck of a time with #1 — identifying and counting the stuff — and this is supposed to be the easy step. Often we’re surprised by this. In fact, sometimes we don’t even realize that we’re failing at step #1 — because it’s supposed to be the easy part of the process. However, the effects of BYOD, hundreds of new SaaS services that don’t require substantial capital investment, regular fluctuations in users, evolving and unclear organizational hierarchies, and other factors all contribute to this inability to establish the denominator. A robust denominator, one that fully establishes our domain, is missing.

Solar flares and cyberattacks

Unlike industries such as finance, shipping, and some health sectors, which can have sometimes hundreds of years of data to analyze, the IT, Information Management, and cybersecurity industries are very new and are evolving very rapidly. There is not the option of substantial historical data to assist with predictive analysis.

However, even firms such as Lloyd’s of London must deal with emerging risk for which there is little to no historical data. Speaking about attempts to underwrite risks from solar flare damage (of all things), emerging risks manager Neil Smith says, “In the future types of cover may be developed but the challenge is the size of the risk and getting suitable data to be able to quantify it … The challenge for underwriters of any new risk is being able to quantify and price the risk. This typically requires loss data, but for new risks that doesn’t really exist.” While he is talking about solar flare risk, the point applies readily to the risk we have in cybersecurity and Information Management.

In an interview with Lloyds.com, Oliver Dlugosch, head of global markets at reinsurer Swiss Re Corporate Solutions, says that he asks the following questions when evaluating an emerging risk:

- Is the risk insurable?

- Does the company have the risk appetite for this particular emerging risk?

- Can the risk be quantified (particularly in the absence of large amounts of historical data)?

Whence the denominator?

So back to the missing denominator. What to do? How do we do our risk analysis? Some options for addressing this missing denominator might be:

- Go no further until that denominator is established. (However, that is not likely to be fruitful as we may never get to do any analysis if we get hung up on step 1) OR

- Make the problem space very small so that we have a tractable denominator (but then our risk analysis is probably so narrow that it is not useful to us) OR

- Move forward with only a partial denominator and being ready to continually backfill that denominator as we learn more about our evolving environment.

I think there is something to approach #3. The downside is that with it we may feel we are departing from what we perceive as intellectual honesty, scientific method, or possibly even ‘truthiness.’ However, it is better than #1 or #2 and better than doing nothing. I think we can resolve the intellectual honesty part to ourselves by 1) acknowledging that this approach will take more work (i.e., constantly updating the quantity and quality of the denominator) and 2) acknowledging that we’re going to know even less than we were hoping for.
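As a rough sketch of what working with a partial denominator could look like, the Python below keeps a running inventory that is backfilled as new assets turn up, and reports what fraction of the known domain has been assessed. The `Inventory` class and the asset names are hypothetical, invented here for illustration only.

```python
class Inventory:
    """A partial denominator: the assets we know about so far."""

    def __init__(self):
        self.known = set()     # the denominator, always incomplete
        self.assessed = set()  # the numerator: assets we have analyzed

    def discover(self, assets):
        # Backfill the denominator as we learn more about the environment.
        self.known.update(assets)

    def assess(self, asset):
        # Assessing something we had not counted yet also backfills it.
        self.known.add(asset)
        self.assessed.add(asset)

    def coverage(self) -> float:
        # Fraction of the *known* domain that has been assessed.
        return len(self.assessed) / len(self.known) if self.known else 0.0


inv = Inventory()
inv.discover(["mail server", "hr laptop", "file share", "crm saas"])
inv.assess("mail server")
inv.assess("file share")
before = inv.coverage()

# Later we learn about BYOD phones and SaaS tools nobody had listed.
inv.discover(["byod phone 1", "byod phone 2", "survey saas", "chat saas"])
after = inv.coverage()
print(before, after)
```

Coverage falls when the denominator grows, which is the "more work to know less" effect in miniature: the earlier figure was only ever coverage of what had been counted.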

So, more work to know less than we were hoping for. It doesn’t sound very appetizing, but as the world becomes increasingly complex, I think we have to accept that we will know less about the future and that this approach is our best shot.

“Not everything that can be counted counts. Not everything that counts can be counted.”

William Bruce Cameron (probably)

[Images: WikiMedia Commons]

Corporations better than government for your data ? (!!??!!)

Do you remember, in the movie Aliens, Hudson’s (played by Bill Paxton) enthusiastic concurrence with Ripley’s (Sigourney Weaver) proposal of “I say we take off and nuke the entire site from orbit. It’s the only way to be sure”? I have similar enthusiasm for the point of this Guardian article: why in the world do we think that leaving our private data with megacorporations instead of with the government is better? I’m not advocating giving it to the government, but I don’t understand all of the excitement about why leaving it with arguably less transparent corporations is better.

We need to be careful to not throw the baby out with the bath water.

Dethroning of “password” as #1 bad password

“password” has been replaced by the cryptographically clever “123456” according to SplashData.

Where’s 8675309 when you need it? And Jenny for that matter?

Automation — another long tail

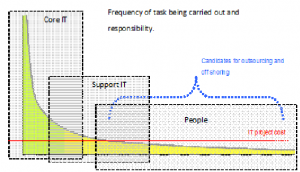

Descending frequency of different tasks moving left to right — and the who and what performs these tasks

Jason Kingdon illustrates what types of tasks are done by different parts of an organization and argues that more use can be made of “Software Robots” to handle the many different types of tasks that occur with low frequency, aka the long tail, stretching out to the right. (That is, each particular type of task in this region occurs with low frequency, but there are many of these low-frequency task types.)

The chart shows how often particular tasks are carried out, ranked in descending order left to right, and who (or what) does the task — an organization’s core IT group, its support IT group, or end users.

- Core IT has taken on tasks such as payroll, accounting, finance, and HR

- Support IT has taken on roles such as CRM systems, business analytics, web support, and process management

- People still staff call centers to work with customers and people are still used to correct errors, handle invoices, and monitor regulation/compliance implementation.
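To make the long-tail shape concrete, here is a small Python sketch that ranks task types by how often they occur and splits them into a high-frequency head and a low-frequency tail. The task names, counts, and the `head_size` cutoff are made up for illustration and are not taken from Kingdon's chart.

```python
from collections import Counter


def long_tail(task_log, head_size):
    """Rank task types by frequency and split them into head and tail."""
    ranked = Counter(task_log).most_common()  # descending frequency
    return ranked[:head_size], ranked[head_size:]


# Hypothetical task log: a few frequent task types, many rare ones.
task_log = (
    ["payroll run"] * 50
    + ["crm update"] * 30
    + ["fix invoice"] * 3
    + ["address change"] * 2
    + ["refund query"] * 2
    + ["compliance check"] * 1
)

head, tail = long_tail(task_log, head_size=2)
tail_volume = sum(count for _, count in tail)
print(len(head), "head task types;", len(tail), "tail task types;",
      tail_volume, "tail tasks in total")
```

Each tail task type is rare on its own, but in this toy log the tail holds more distinct task types than the head does.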

Kingdon says that Software Robots mimic humans and work via the same interfaces that humans do, thereby making them forever compatible. That is, they don’t work at some deeper abstracted systems logic, but rather via the same interface as people. Software Robots are trained, not programmed and they work in teams to solve problems.

I think there is something to this and look forward to hearing more.

Highlights of Cisco 2014 Annual Security Report

- Report focuses on exploiting trust as thematic attack vector

- Botnets are maturing in capability & targeting significant Internet resources such as web hosting servers & DNS servers

- Attacks on electronics manufacturing, agriculture, and mining are occurring at 6 times the rate of other industries

- Spam trends downward, though malicious spam remains constant

- Java at heart of over 90% of web exploits

- Watering hole attacks continue targeting specific industries

- 99% of all mobile malware targeted Android devices

- Brute-force login attempts up 300% in early 2013

- DDoS threat on the rise again, including DDoS combined with other criminal activity such as wire fraud

- Shortage of security talent contributes to problem

- Security organizations need data analysis capacity

Top themes for spam messages:

- Bank deposit/payment notifications

- Online product purchase

- Attached photo

- Shipping notices

- Online dating

- Taxes

- Gift card/voucher

- PayPal

Cyber Command spending

Satellite communication systems vulnerable

Similar to other Industrial Control System (ICS) and Internet of Things (IoT) devices and systems, small satellite dishes called VSATs (very small aperture terminals) are also exposed to compromise. These systems are often used in critical infrastructure, by banks and news media, and are also widely used in the maritime shipping industry. Some of the same problems exist with these systems as with other IoT and ICS:

- default password in use

- no password

- unnecessary communications services turned on (eg telnet)
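The three problems listed above are easy to check for once you have an inventory of your own devices. The Python sketch below runs entirely offline against hypothetical inventory records; the record fields, the list of default passwords, and the device names are assumptions made for illustration, not anything from the IntelCrawler report or a real VSAT product.

```python
# Assumed values for illustration only; a real list would come from vendor docs.
DEFAULT_PASSWORDS = {"admin", "password", "1234"}
RISKY_SERVICES = {"telnet"}


def audit(device):
    """Return a list of findings for one inventory record (a plain dict)."""
    findings = []
    password = device.get("password")
    if not password:
        findings.append("no password")
    elif password in DEFAULT_PASSWORDS:
        findings.append("default password in use")
    # Flag any enabled service that appears on the risky list.
    for service in sorted(RISKY_SERVICES & set(device.get("services", []))):
        findings.append(f"unnecessary service enabled: {service}")
    return findings


# Hypothetical inventory records.
inventory = [
    {"name": "vsat-dock-1", "password": "admin", "services": ["https"]},
    {"name": "vsat-ship-7", "password": "", "services": ["telnet", "https"]},
    {"name": "vsat-hq", "password": "S7!long-unique", "services": ["https"]},
]

report = {d["name"]: audit(d) for d in inventory}
for name, findings in report.items():
    print(name, findings or "ok")
```

A check like this only covers devices that made it into the inventory, so it inherits whatever gaps the inventory has.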

According to this Christian Science Monitor article, cybersecurity group IntelCrawler reports 10,500 of these devices being exposed globally. Indeed, a quick Shodan search just now for ‘VSAT’ in the banner returns over 1200 devices.

The deployment of VSAT devices continues to rapidly grow with 16% growth demonstrated in 2012 (345,000 terminals sold) and 1.7 million sites in global service in 2012. 2012-2016 market growth is expected to be almost 8% for the maritime market alone.

Clearly, another area in need of buttoning up.

[Image:WikiMedia Commons]