When we as organizations, whether companies, government agencies, or educational institutions, talk about managing information risk, the conversation tends to be about desktops and laptops, web and application servers, and mobile devices like tablets and smartphones. Often, it’s challenging enough to set aside time to talk about even those. However, there is a new, rapidly emerging risk that generally hasn’t made it into the discussion yet. It’s the everything else part.

The problem is that the everything else might become the biggest part.

Everything else

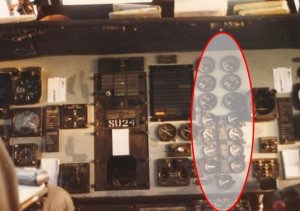

This everything else includes networked devices and systems that are generally not workstations, servers, or smartphones. It includes things like networked video cameras, HVAC and other building controls, wearable computing like Google Glass, personal medical devices like glucose monitors and pacemakers, home and business security and energy management, and others. The popular term for these has become the Internet of Things (IoT), with some portions also sometimes referred to as Industrial Control Systems (ICS).

There are a couple of reasons for this lack of awareness. One is simply the relative newness of this sort of networked computing. It just hasn’t been around that long in large numbers (but it is growing fast). Another reason is that it is hard to define. It doesn’t fit well with historical descriptions of technology devices and systems. These devices and systems have attributes and issues that are unlike what we are used to.

Gotta name it to manage it

So what do we call this ‘everything else’, and how do we wrap our heads around it to assess the risk it brings to our organizations? As mentioned, devices and systems in this group of everything else can have some unique attributes and issues. Beyond the unsatisfying approach of defining these devices and systems by what they are not (workstations, application and infrastructure servers, and phones/tablets), here are some of the attributes they tend to share:

- difficult to patch/update software (& more likely, many or most will never be patched)

- inexpensive: there can be little barrier to entry to putting these devices/systems on our networks, e.g., easy-setup network cameras for $50 at your local drugstore

- large variety/variability: many different types of devices from many different manufacturers with many different versions, another long tail

- greater mystery to hardware/software provenance (where did they come from? how many different people/companies participated in the manufacture? who are they?)

- large numbers of devices: because they’re inexpensive, it’s easy to deploy a lot of them. Difficult or impossible to count, much less inventory

- identity: devices might not have the traditional notion of identity, such as having a device ‘owner’

- little precedent: not much in the way of helpful existing risk management models. Few policies or guidelines for use.

- everywhere: out-ubiquitizes (you can quote me on that) the PC’s famed Bill Gatesian ubiquity

- most are not hidden behind corporate or other firewalls (see Shodan)

- environmental sensing & interacting (Tommy, can you hear me?)

- comprises a growing fraction of Industrial Control and Critical Infrastructure systems

So, after all that, I’m still kind of stuck with ‘everything else’ as a description at this point. But, clearly, that description won’t last long. Another option, though it might have a slightly creepy quality, could be ‘human operator independent’ devices and systems. (But the acronym ‘HOI’ sounds a bit like ‘Oy!’, and that could be fun.)

I’m open to ideas here. Managing the risks associated with these devices and systems will continue to be elusive if it’s hard to even talk about them. If you’ve got ideas about language for this space, I’m all ears.