IoT data pipelines require labor too

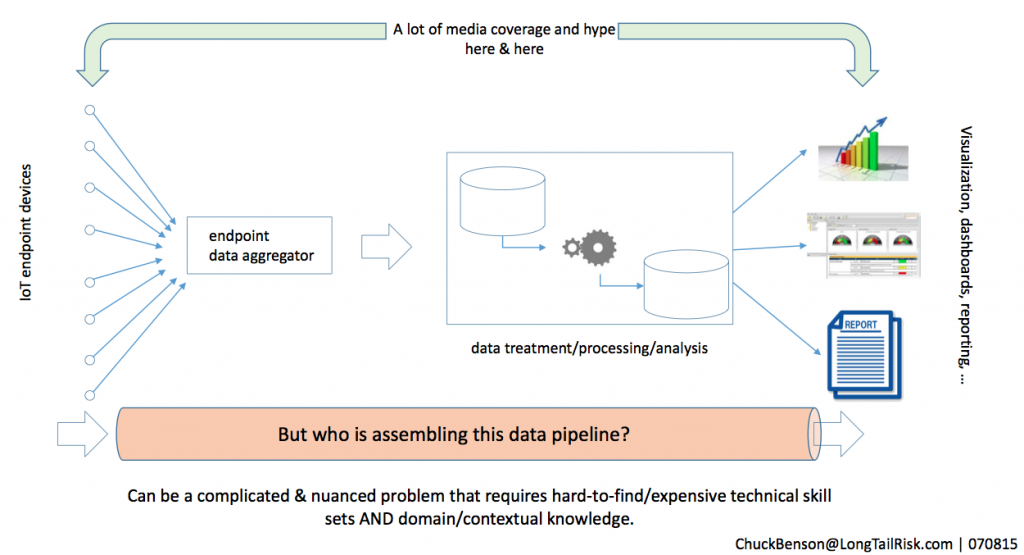

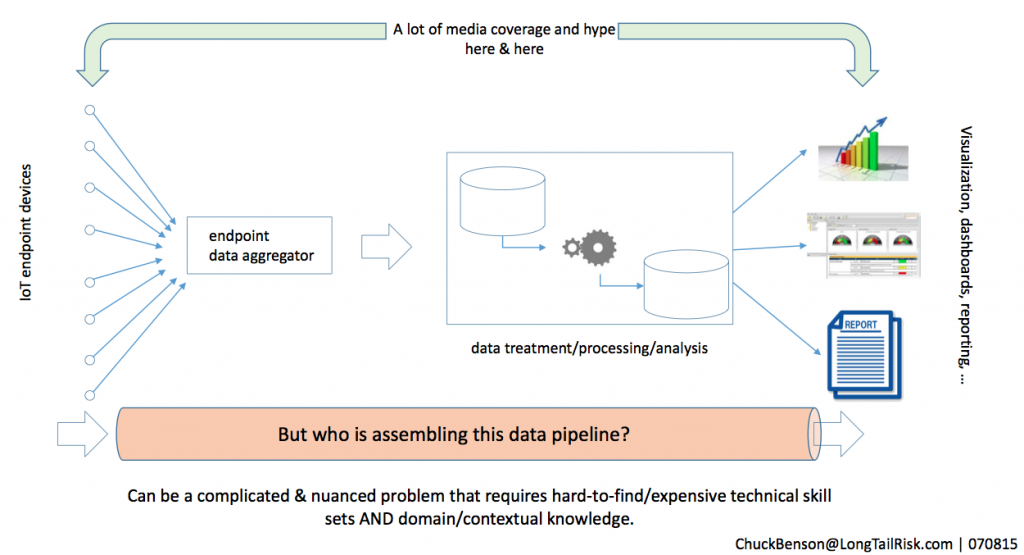

There is a lot of excitement around IoT systems for companies, institutions, and governments that sense, aggregate, and publish useful, previously unknown information via dashboards, visualizations, and reporting. In particular, there has been much focus on the IoT endpoints that sense energy and environmental quantities and qualities in a multitude of ways. Similarly, everybody and their brother has a dashboard to sell you. And while visualization tools are not yet as numerous, they too are growing in number and availability. What is easy to miss, though, in IoT sensor and reporting deployments is the pipeline of data collection, aggregation, processing, and reporting/visualization. How does the data get from all of those sensors, through the processing steps along the way, to the point where it can be reported or visualized? This part can be more difficult than it initially appears.

Getting from the many Point A’s to Point B

While ‘big data’, analysis, and visualization have been around for a few years and continue to evolve, the new possibilities brought on by IoT sensing devices are more recent news. Getting an individual’s IoT data from the point of sensing to a dashboard or similar reporting tool is generally not an issue. This is because data is coming from only one (or a few) sensing points and will (in theory) only be used by that individual. However, for companies, institutions, and governments that seek to leverage IoT sensing and aggregating systems to bring about increased operating effectiveness and ultimately cost savings, this is not a trivial task. Reliably and continuously collecting data from hundreds, thousands, or more points is not a slam dunk.

Companies and governments typically have pre-existing network and computing infrastructure systems. For these new IoT systems to work, the many sensing devices (IoT endpoints) need to be installed by competent and trusted professionals. Further, the data from those devices needs to be collected and aggregated by a system or device that knows how to talk to the endpoints. After that (or possibly before), the data needs to be processed to handle sensing anomalies across the array of sensors. From there, creating operational and system health data and indicators is highly desirable so that the new IoT system can be monitored and maintained. Finally, data analysis and massaging are completed so that the data can be published in a dashboard, report, or visualization. The question is, who does this work? Who connects these dots and maintains that connection?
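To make the middle of that pipeline a little more concrete, here is a minimal sketch of the cleaning, aggregation, and health-indicator steps for one batch of readings. Everything in it is an assumption made for illustration: the `Reading` type, the sensor names, the `VALID_RANGE` limits, and the `run_pipeline` function are hypothetical, and a real deployment would also have to deal with device protocols, timestamps, storage, and retries.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Reading:
    sensor_id: str
    value: Optional[float]  # None models a failed or missing read


# Hypothetical plausible range for a temperature sensor, in degrees Celsius.
VALID_RANGE = (-40.0, 85.0)


def is_valid(reading: Reading) -> bool:
    """Treat missing or out-of-range values as sensing anomalies."""
    if reading.value is None:
        return False
    low, high = VALID_RANGE
    return low <= reading.value <= high


def run_pipeline(expected_sensors, readings):
    """Clean, aggregate, and summarize one batch of readings."""
    valid_by_sensor = {sensor_id: [] for sensor_id in expected_sensors}
    rejected = 0
    for reading in readings:
        # Readings from unknown sensors are rejected along with anomalies.
        if reading.sensor_id in valid_by_sensor and is_valid(reading):
            valid_by_sensor[reading.sensor_id].append(reading.value)
        else:
            rejected += 1

    # Aggregation: one average per sensor that produced usable data.
    averages = {s: mean(v) for s, v in valid_by_sensor.items() if v}

    # Health indicators: which expected sensors went quiet, and how much
    # data had to be thrown away.
    health = {
        "silent_sensors": sorted(s for s, v in valid_by_sensor.items() if not v),
        "rejected_readings": rejected,
    }
    return averages, health


batch = [
    Reading("lobby", 21.0),
    Reading("lobby", 23.0),
    Reading("roof", 900.0),  # out of range
    Reading("roof", None),   # failed read
]
averages, health = run_pipeline(["lobby", "roof", "basement"], batch)
print(averages)
print(health)
```

Even a toy version like this has to decide what counts as an anomaly and which sensors are expected to report. Someone has to make those decisions and keep them current, which is exactly the labor in question.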

Who supplies the labor to build the pipeline?

The supplier of the IoT endpoint devices won’t be the one to build this data pipeline. Similarly, the provider of the visualization/reporting technology won’t build it. The job will probably default to the company or government that is purchasing the new IoT technology. To meet that additional demand, the labor will need to be contracted out or diverted from internal resources, both of which incur costs, whether direct costs or opportunity costs.

Patching IoT endpoint devices – Surprise! It probably won’t get done

Implementing large numbers of IoT devices, and keeping them healthy, also forces a choice among these options:

- patching the devices, or

- accepting the risk of unpatched devices, or

- some hybrid of the two.

Unless the IoT endpoint vendor supplies the ability to automatically patch endpoint devices in a way that works on your network, those devices probably won’t get patched. From a risk management point of view, probably the best approach is to identify the highest risk endpoint devices and try to keep those patched. But I suspect even that will be difficult to accomplish.

Also, as endpoint devices become increasingly complicated and gain richer feature sets to remain competitive, they have a greater ability to damage your network and assets or those of others on the Internet. Any one of the above options increases cost to the organization, and yet that cost typically goes unseen.
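The risk-based approach mentioned above, patching only the highest-risk endpoints, can be sketched roughly as follows. This is an illustration under stated assumptions, not a calibrated method: the `Endpoint` fields, the weights in `risk_score`, and the device names are all hypothetical, and a real organization would need to choose its own risk factors.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    name: str
    internet_facing: bool
    days_since_patch: int
    controls_equipment: bool


def risk_score(device: Endpoint) -> int:
    """Hypothetical scoring rule; the weights are illustrative only."""
    score = 0
    if device.internet_facing:
        score += 3
    if device.controls_equipment:
        score += 2
    if device.days_since_patch > 180:
        score += 1
    return score


def patch_shortlist(devices, capacity):
    """Return the `capacity` highest-risk devices, highest risk first."""
    ranked = sorted(devices, key=risk_score, reverse=True)
    return ranked[:capacity]


devices = [
    Endpoint("hallway-thermometer", False, 400, False),
    Endpoint("hvac-controller", True, 30, True),
    Endpoint("door-sensor", True, 200, False),
]
shortlist = patch_shortlist(devices, capacity=2)
print([d.name for d in shortlist])
```

The `capacity` parameter stands in for how many devices the available staff can realistically patch. The ranking itself is cheap to compute; building and maintaining the device inventory it depends on is where the labor goes.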

Labor leaks

Anticipating labor requirements along the IoT data pipeline is critical for IoT system success. However, this requirement often goes unseen, and the labor leaks away unaccounted for. We tend to get caught up in the IoT devices at the beginning of the data pipeline and the fancy dashboards at the end, and forget about the hard work of building a quality pipeline in the middle. In our defense, each end of the pipeline is where the bulk of the marketing and sales efforts are. This effort of building a reliable, secure, and continuous data pipeline is a part of the socket concept that I mentioned in an earlier post, Systems in the Seam – Shortcomings in IoT systems implementation.

With rapidly evolving new sensing technologies and new ways to integrate and represent data, we are in an exciting time and have the potential to be more productive, profitable, safe, and efficient. However, it’s not all magical: the need for labor has not gone away. The requirement to connect the dots, the devices and points along the pipeline, is still there. If we don’t account for this labor, our ROI from IoT systems investments will never be what we hoped it would be.